5.4 SENSOR TECHNOLOGY


INTRODUCTION |

A sensor is a device that measures a physical quantity such as temperature, light intensity, pressure, or distance. In most cases the value delivered by the sensor can be any number within the measuring range. However, some sensors know only two states, similar to a switch, for example fill level detectors and touch sensors. The sensor usually converts the physical quantity into an electrical voltage, which is processed further by evaluation electronics. (For processing with a computer, an analog-to-digital converter (ADC) must convert the voltage into a number.) The internal structure of a sensor can be highly complex, as in ultrasonic sensors, gyroscope sensors, or laser distance meters.

The characteristic curve of the sensor describes the relationship between the physical quantity and the value delivered by the sensor. For many sensors the characteristic curve is fairly linear, but the conversion factor and the zero offset still have to be determined. To do this, the sensor is calibrated in a series of measurements with known quantities.

The ultrasonic sensor determines the distance to an object from the travel time that a short ultrasonic pulse needs to go from the sensor to the object and back again. For distances between about 30 cm and 2 m, the sensor yields values between 0 and 255, where 255 (in simulation mode -1) is returned when there is no object in the measuring range.
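The calibration of a roughly linear characteristic curve can be sketched in plain Python. This is only an illustration: the function `calibrate`, the helper `to_physical`, and the measurement series are hypothetical and not part of the robot library. The sketch fits a straight line (conversion factor and zero offset) to a few readings taken at known quantities, using the least-squares method.

```python
def calibrate(raw_values, known_values):
    """Fit known = factor * raw + offset by least squares (hypothetical helper)."""
    n = len(raw_values)
    if n < 2 or n != len(known_values):
        raise ValueError("need at least two matching measurement pairs")
    mean_raw = sum(raw_values) / n
    mean_known = sum(known_values) / n
    # Spread of the raw readings and their co-variation with the known values
    sxx = sum((r - mean_raw) ** 2 for r in raw_values)
    if sxx == 0:
        raise ValueError("raw readings must not all be identical")
    sxy = sum((r - mean_raw) * (k - mean_known)
              for r, k in zip(raw_values, known_values))
    factor = sxy / sxx
    offset = mean_known - factor * mean_raw
    return factor, offset

# Invented calibration series: raw sensor readings taken at known distances (cm)
raw = [10, 20, 30, 40]
known = [25.0, 45.0, 65.0, 85.0]
factor, offset = calibrate(raw, known)

def to_physical(raw_value):
    """Convert a raw reading to the physical quantity with the fitted line."""
    return factor * raw_value + offset

print("factor =", factor, "offset =", offset)
print("raw 25 corresponds to", to_physical(25), "cm")
```

With real sensors the points will not lie exactly on a line, so the fitted factor and offset are only as good as the measurement series.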

In most applications, a sensor is integrated into a program in such a way that its value is retrieved periodically. This is called "polling the sensor". The sensor values are read in a repeating loop and processed further in the program. The number of measurements per second (temporal resolution) depends on the sensor type, the speed of the computer, and the data connection between the brick and the program. The ultrasonic sensor is only capable of about 2 measurements per second.

The state of sensors that have only two states can also be detected through polling. However, it is often easier to regard a change of state as an event and to process it in the program with a callback.

USING POLLING OR EVENTS? |

In many cases you can decide whether you would prefer to handle a sensor via polling or via events. The better choice depends on the application. You can compare both procedures by connecting a motor and a touch sensor to the brick. A click on the touch sensor should turn the motor on, and another click should turn it off again.

Events are the more elegant solution for this application because they inform you about the pressing of the touch sensor through a function call. You simply pass this function as a named parameter when creating the TouchSensor. With polling, on the other hand, you need a flag so that only the transition from the non-pressed state to the pressed state is processed.

With polling:

```python
from nxtrobot import *
#from ev3robot import *

def switchMotorState():
    # Toggle the motor: stop it if it is running, otherwise start it
    if motor.isMoving():
        motor.stop()
    else:
        motor.forward()

robot = LegoRobot()
motor = Motor(MotorPort.A)
robot.addPart(motor)
ts = TouchSensor(SensorPort.S3)
robot.addPart(ts)
isOff = True  # flag: True while the touch sensor is not pressed
while not robot.isEscapeHit():
    # Transition from non-pressed to pressed: react exactly once
    if ts.isPressed() and isOff:
        isOff = False
        switchMotorState()
    # Sensor released: allow the next press to be detected
    if not ts.isPressed() and not isOff:
        isOff = True
robot.exit()  # added for consistency with the other programs in this section
```

With events:

```python
#from nxtrobot import *
from ev3robot import *

def onPressed(port):
    # Called by the system each time the touch sensor is pressed
    if motor.isMoving():
        motor.stop()
    else:
        motor.forward()

robot = LegoRobot()
motor = Motor(MotorPort.A)
robot.addPart(motor)
ts = TouchSensor(SensorPort.S1, pressed = onPressed)
robot.addPart(ts)
while not robot.isEscapeHit():
    pass
robot.exit()
```
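To see why the polling version needs a flag, the same logic can be tried without a robot on a list of simulated readings. This is a sketch only: the function `find_press_edges` and the sample list are invented for illustration, and each list entry stands for one pass through the polling loop.

```python
def find_press_edges(samples):
    """Return the loop passes at which a non-pressed -> pressed transition occurs."""
    isOff = True          # same flag as in the polling program
    edges = []
    for i, pressed in enumerate(samples):
        if pressed and isOff:
            isOff = False
            edges.append(i)   # here the real program would toggle the motor
        if not pressed and not isOff:
            isOff = True
    return edges

# Invented readings: the sensor is held down for several loop passes, twice
samples = [False, True, True, True, False, False, True, True, False]
edges = find_press_edges(samples)

# Without the flag, every pass with a pressed sensor would toggle the motor
naive_toggles = sum(1 for pressed in samples if pressed)

print("presses detected with flag:", len(edges), "at passes", edges)
print("toggles without flag:", naive_toggles)
```

Because the loop runs much faster than a finger moves, one physical press spans many passes. The flag reduces each press to a single reaction.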

MEMO |


Sensors can be handled with polling or with events. You must know both methods and be able to decide which one is more appropriate in a given situation. In the event model, you define functions whose names usually begin with "on". These are called callbacks because the system calls them automatically ("calls back") when the event occurs. You register callbacks using named parameters when creating the sensor object.
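How a callback passed as a named parameter ends up being called by the system can be sketched with a small stand-in class. `FakeTouchSensor` and its method `simulate_press` are hypothetical and only imitate the idea. They say nothing about how the real TouchSensor is implemented.

```python
class FakeTouchSensor:
    """Hypothetical stand-in that stores a callback given as a named parameter."""

    def __init__(self, port, pressed=None):
        self.port = port
        self._pressed_callback = pressed   # registration happens at creation

    def simulate_press(self):
        # The "system" side: call the registered function when the event occurs
        if self._pressed_callback is not None:
            self._pressed_callback(self.port)

events = []

def onPressed(port):
    # User-defined callback; the name starts with "on" by convention
    events.append(port)

ts = FakeTouchSensor("S1", pressed=onPressed)
ts.simulate_press()
ts.simulate_press()
print("callback was called for ports:", events)
```

Note that the function itself is passed (`pressed=onPressed`), not the result of calling it (`onPressed()`).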

POLLING AN ULTRASONIC SENSOR |

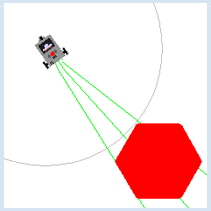

Preliminary note: If you do not have an ultrasonic sensor in your EV3 kit, you can use the EV3 infrared sensor instead.

You must always poll a sensor if you need its measured data at a constant rate. Now you will take on a task where the robot, after you put it anywhere on the floor, has to find an object (a target) and travel to it. You use an ultrasonic sensor to detect the target, in a way similar to a radar target recognition system.

To learn about the properties of a sensor and to try it out, you should not shy away from writing a short test program that you will not need later on. It is advisable to write out the sensor values and also to make them audible, since you then have your hands and eyes free to move the robot and the sensor. You request the sensor values in a loop whose period depends on whether the robot runs in autonomous mode or in external control mode.

```python
# from nxtrobot import *
from ev3robot import *

robot = LegoRobot()
us = UltrasonicSensor(SensorPort.S1)
robot.addPart(us)
isAutonomous = robot.isAutonomous()
while not robot.isEscapeHit():
    dist = us.getDistance()
    print("d = ", dist)
    robot.drawString("d=" + str(dist), 0, 3)
    # Tone pitch rises with the measured distance
    robot.playTone(10 * dist + 100, 50)
    if dist == 255:
        # 255 means no object in range: play the tone a second time
        robot.playTone(10 * dist + 100, 50)
    # Polling period depends on the operating mode
    if isAutonomous:
        Tools.delay(1000)
    else:
        Tools.delay(200)
robot.exit()
```

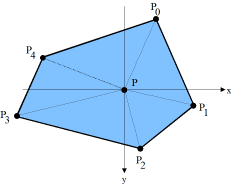

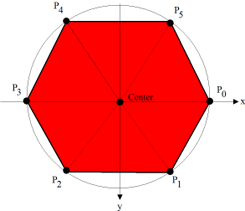

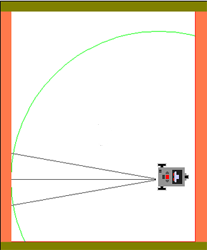

```python
from simrobot import *
#from nxtrobot import *
#from ev3robot import *

mesh = [[50, 0], [25, 43], [-25, 43], [-50, 0], [-25, -43], [25, -43]]
RobotContext.useTarget("sprites/redtarget.gif", mesh, 400, 400)

def searchTarget():
    global left, right
    found = False
    step = 0
    while not robot.isEscapeHit():
        gear.right(50)
        step = step + 1
        dist = us.getDistance()
        print("d = ", dist)
        if dist != -1: # simulation
        #if dist < 80: # real
            if not found:
                # First step at which the target is seen
                found = True
                left = step
                print("Left at", left)
                robot.playTone(880, 500)
        else:
            if found:
                # First step at which the target is lost again
                right = step
                print("Right at ", right)
                robot.playTone(440, 5000)
                break

left = 0
right = 0
robot = LegoRobot()
gear = Gear()
robot.addPart(gear)
us = UltrasonicSensor(SensorPort.S1)
robot.addPart(us)
us.setBeamAreaColor(makeColor("green"))
us.setProximityCircleColor(makeColor("lightgray"))
gear.setSpeed(5)
print("Searching...")
searchTarget()
# Turn back toward the target
gear.left((right - left) * 25) # simulation
#gear.left((right - left) * 100) # real
print("Moving forward...")
gear.forward()
while not robot.isEscapeHit() and gear.isMoving():
    dist = us.getDistance()
    print("d =", dist)
    robot.playTone(10 * dist + 100, 100)
    if dist < 40:
        gear.stop()
print("All done")
robot.exit()
```
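The search logic of the program above can be tried without a robot on a list of invented distance readings. The following sketch rests on one plausible reading of the simulation values: each search step turns right for 50 ms, so the target spans `(right - left) * 50` ms of rotation, and turning back for half of that time, `(right - left) * 25` ms, points the robot roughly at the middle of the target. The function `find_edges`, the constant `STEP_MS`, and the readings are hypothetical.

```python
STEP_MS = 50   # duration of one search step, as in gear.right(50)

def find_edges(readings, no_target=-1):
    """Return (left, right): the step where the target appears and where it is lost."""
    left = None
    right = None
    for step, dist in enumerate(readings, start=1):
        if dist != no_target:
            if left is None:
                left = step        # target seen for the first time
        elif left is not None:
            right = step           # target lost again: stop searching
            break
    return left, right

# Invented readings of one sweep in simulation mode (-1 means no target)
readings = [-1, -1, -1, 120, 118, 117, 119, -1, -1]
left, right = find_edges(readings)

# Turn back for half the time the target was in view
turn_back_ms = (right - left) * STEP_MS // 2

print("left =", left, "right =", right, "turn back for", turn_back_ms, "ms")
```

On the real robot the program uses a different factor (100 instead of 25), so the timing has to be adapted by trial rather than taken from this calculation.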


MEMO |


You can usually determine the sensor value by repeatedly querying (polling) a getter method (getValue(), getDistance(), etc.). When switching between simulation mode and real mode you have to adjust certain values, especially time intervals. You must also note that the sensor returns -1 in simulation mode and 255 in real mode if it cannot find the target. In simulation mode, the viewing direction of the ultrasonic sensor is determined by the sensor port used:


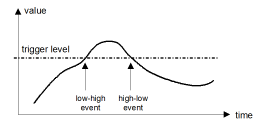
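Since the "no target" value differs between the two modes (-1 in simulation mode, 255 in real mode), it can help to hide the difference in one small function, so that the rest of the program needs no changes when you switch modes. The helper `has_target` and the two constants below are hypothetical names and not part of the library.

```python
NO_TARGET_SIMULATION = -1   # value returned in simulation mode
NO_TARGET_REAL = 255        # value returned by the real sensor

def has_target(dist, simulated):
    """Return True if the reading belongs to a detected object (hypothetical helper)."""
    sentinel = NO_TARGET_SIMULATION if simulated else NO_TARGET_REAL
    return dist != sentinel

print(has_target(120, simulated=True))    # an object at 120 in simulation mode
print(has_target(-1, simulated=True))     # no object in simulation mode
print(has_target(255, simulated=False))   # no object in real mode
```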

EVENTS WITH A TRIGGER LEVEL |

The sensors have a default value for the trigger level, but you can change this with setTriggerLevel().

```python
from simrobot import *
#from nxtrobot import *
#from ev3robot import *

RobotContext.setStartPosition(250, 200)
RobotContext.setStartDirection(-90)
RobotContext.useBackground("sprites/circle.gif")

def onDark(port, level):
    # Called when the light sensor reports a dark event:
    # back up, turn, and drive on
    gear.backward(1500)
    gear.left(545)
    gear.forward()

robot = LegoRobot()
gear = Gear()
robot.addPart(gear)
ls = LightSensor(SensorPort.S3, dark = onDark)
robot.addPart(ls)
ls.setTriggerLevel(100) # adapt value
gear.forward()
while not robot.isEscapeHit():
    pass
robot.exit()
```
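The idea behind the trigger level in the program above can be illustrated in plain Python. The sketch assumes that a dark event is fired when the measured value falls from above the trigger level to below it, and a bright event when it rises back above. The class `LevelTrigger` and the sample values are hypothetical and do not show how the library itself detects events.

```python
class LevelTrigger:
    """Hypothetical helper that turns level crossings into callback calls."""

    def __init__(self, level, dark=None, bright=None):
        self.level = level
        self.dark = dark
        self.bright = bright
        self._was_bright = None   # unknown until the first value arrives

    def feed(self, value):
        is_bright = value >= self.level
        # Fire only on a change of side, never on the first value
        if self._was_bright is not None and is_bright != self._was_bright:
            callback = self.bright if is_bright else self.dark
            if callback is not None:
                callback(value)
        self._was_bright = is_bright

log = []

def onDark(level):
    log.append(("dark", level))

def onBright(level):
    log.append(("bright", level))

trigger = LevelTrigger(100, dark=onDark, bright=onBright)
for value in [300, 250, 90, 80, 120, 60]:   # invented light readings
    trigger.feed(value)

print(log)
```

Only the crossings produce events. Consecutive values on the same side of the trigger level (such as 90 followed by 80) are ignored, which is what distinguishes triggering from simply testing the value in every loop pass.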


MEMO |


The crossing of a particular measured value can be interpreted as an event. This is called triggering. Default values of the trigger levels:

The advantages and disadvantages of the event model, compared to polling, can be summarized as follows:


EXERCISES |


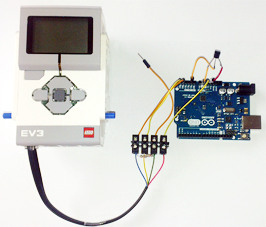

ADDITIONAL MATERIAL: ARDUINO-SENSORS |

Here the EV3 acts as the I2C master and the Arduino as the I2C slave. The additional software support is already included in the TigerJython distribution. The EV3 can be operated in direct or autonomous mode. For more information, consult the website http://www.aplu.ch/ev3.